Starting with Semantic Kernel to create an AI agent

This post offers practical examples: setting up a chat completion agent with a model served locally through Ollama, managing conversation history, streaming responses, and giving the agent a plugin it can call.

When Semantic Kernel first came out, I was excited. I tried it, but getting started turned out to be quite a hassle. I also looked at LangChain, which was a lot more mature at the time and felt much easier to pick up: I built my first AI application in a day. Happy days.

Since my first encounter with Semantic Kernel, a lot has changed. Microsoft kept working on it, and many things got better and easier. So on my last holiday I decided to give Semantic Kernel another try. And things really have changed: it is now a lot easier to get started with Semantic Kernel and AI.

In this post I walk you through some of the basics.

Let's start with chat completion. Chat completion is basically the ability to have a conversation with your LLM: you send messages back and forth to give the model information, context, and even tasks. I consider this the nervous system of an AI application because, from this point, you can have it do all kinds of tasks for you, as long as you give it the tools to do them. Chat completion needs a model to talk to. Consider the following questions when choosing one; otherwise, you might miss base capabilities you need later.

- What modalities does the model support (e.g., text, image, audio, etc.)?

- Does it support function calling?

- How fast does it receive and generate tokens?

- How much does each token cost?

The model you choose plays a central role. Semantic Kernel offers connectors for various model providers. For this post, I use the Ollama connector with a local model, since that is free if your machine can run it: https://www.llama.com/ (installation instructions: https://www.llama.com/docs/llama-everywhere/)

Create a new console application and add the following package:

    dotnet add package Microsoft.SemanticKernel.Connectors.Ollama --prerelease

Next, change your code as follows. This creates the kernel and adds an IChatCompletionService to its dependency container.

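    #pragma warning disable SKEXP0070 // the prerelease Ollama connector is flagged as experimental
    using Microsoft.SemanticKernel;

    // Assumed values: a model you have pulled locally and Ollama's default endpoint.
    var model = "llama3.2";
    var endpoint = "http://localhost:11434";

    var kernel = Kernel.CreateBuilder()
        .AddOllamaChatCompletion(modelId: model, endpoint: new Uri(endpoint))
        .Build();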

With the kernel built, get the chat completion service from the container (IChatCompletionService lives in the Microsoft.SemanticKernel.ChatCompletion namespace):

    var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();

The next thing you need is something that keeps track of all the messages between you and your AI agent. For this, we use a ChatHistory object. It lets the model follow the conversation and everything that has been said. Let's add that.

    ChatHistory history = [];
    history.AddUserMessage("What AI model are you?");

We also add the question that we are going to ask the model through Semantic Kernel.
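To make it concrete what the history object holds, here is a minimal sketch that fills a ChatHistory by hand and prints it. The message texts and the name `demoHistory` are made up for illustration, and no model is called; in the real program the assistant message would come from the model.

```csharp
using Microsoft.SemanticKernel.ChatCompletion;

// A hand-built conversation; all message texts are invented for illustration.
ChatHistory demoHistory = [];
demoHistory.AddSystemMessage("You are a friendly assistant.");
demoHistory.AddUserMessage("What AI model are you?");
demoHistory.AddAssistantMessage("I am a language model running on your machine.");

// Each entry carries a role and the message text.
// The whole list is what gets sent to the model on every call.
foreach (var message in demoHistory)
{
    Console.WriteLine($"{message.Role}: {message.Content}");
}
```

Because the complete list is sent with each request, the model only "remembers" what is in this object.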

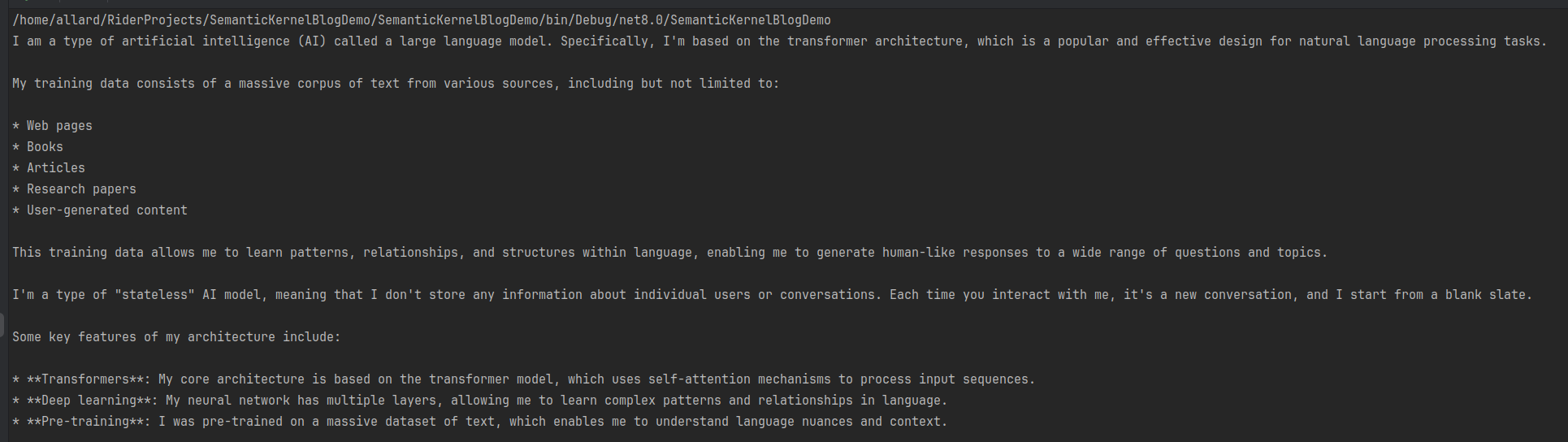

    var response = await chatCompletionService.GetChatMessageContentAsync(
        history,
        kernel: kernel
    );

That's it. We pass the history object (which contains our question) to the GetChatMessageContentAsync method. Now print the response to your console, and be patient while the model processes your request. After a moment, the response appears.

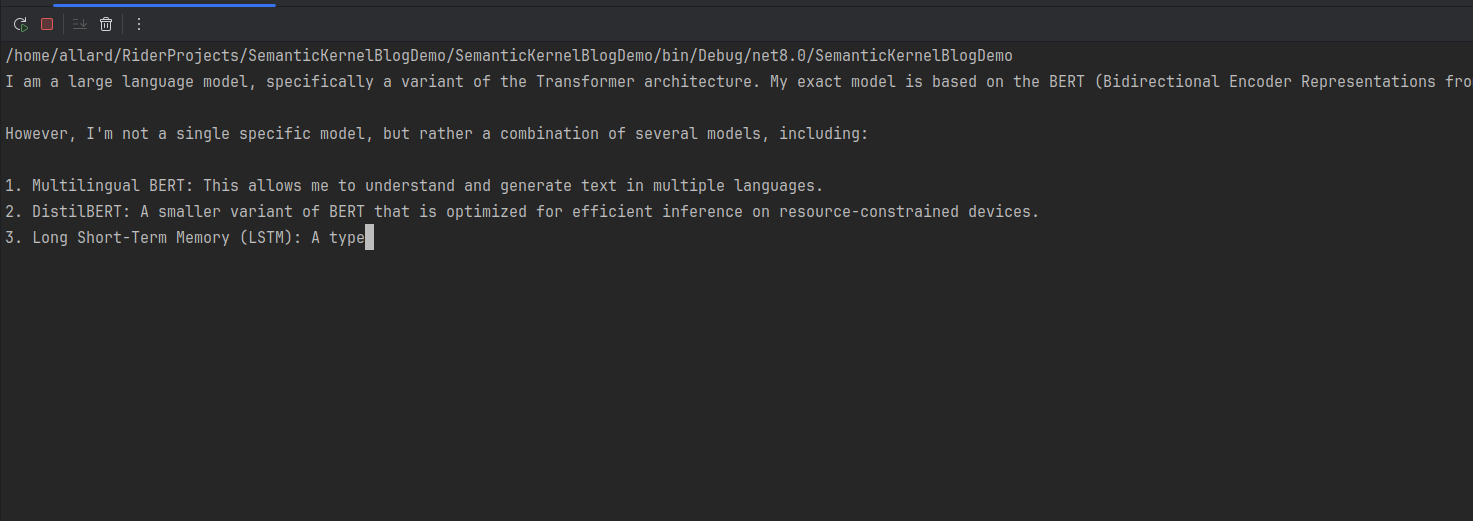

Of course, this is very basic, and we currently have to wait rather long for the complete answer. The Ollama connector also supports streaming, which lets us print the answer piece by piece as the model generates it. Change the previous code block as follows and run the program again.

    var response = chatCompletionService.GetStreamingChatMessageContentsAsync(
        chatHistory: history,
        kernel: kernel
    );

    await foreach (var chunk in response)
    {
        Console.Write(chunk);
    }

Now we already have a functional AI agent. Let's change the code a bit so that we can have an actual conversation:

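    // With streaming
    while (true)
    {
        Console.WriteLine("Enter your message (or 'exit' to quit): ");
        string userMessage = Console.ReadLine() ?? string.Empty;

        if (userMessage.Equals("exit", StringComparison.OrdinalIgnoreCase))
        {
            break;
        }

        history.AddUserMessage(userMessage);

        var response = chatCompletionService.GetStreamingChatMessageContentsAsync(
            chatHistory: history,
            kernel: kernel
        );

        // Collect the streamed chunks so the reply can be stored in the history;
        // without this, the model never sees its own earlier answers.
        var reply = new System.Text.StringBuilder();
        await foreach (var chunk in response)
        {
            Console.Write(chunk);
            reply.Append(chunk.Content);
        }
        Console.WriteLine();

        history.AddAssistantMessage(reply.ToString());
    }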

Next, we are going to give it a purpose. That brings us to one of the hardest parts of working with AI models: prompt engineering.

Plugins

As mentioned earlier, we now have a functional AI Agent. However, we are now going to give it a specific purpose. We are going to configure this AI agent in such a way that it can perform specific tasks, and we will provide it with the necessary tools by feeding it additional information from external sources.

First, we need to define the model's purpose. For this, we pass a system message to the ChatHistory constructor, replacing the empty history we created earlier.

    ChatHistory history = new ChatHistory("You are an AI assistant that will help me plan my exercise routine. " +
        "I will ask you to help me plan my exercise routine. " +
        "Then you will give me a plan. I will ask you to change the plan and you will change it. " +
        "You will not give me any other information than the one I asked for. ");

Next, I will give this AI the ability to call an external API to retrieve weather conditions. Pay close attention to the KernelFunction attribute and the Description attribute. Be clear and precise in both the function name and its description: the more detailed and accurate they are, the better the model will understand when to use this function.

    // These usings belong at the top of the file that contains the class.
    using System.ComponentModel;
    using Microsoft.SemanticKernel;

    public class WeatherPlugin
    {
        private readonly HttpClient _httpClient;

        public WeatherPlugin()
        {
            // Initialize any necessary resources or dependencies here
            _httpClient = new HttpClient();
            _httpClient.BaseAddress = new Uri("https://api.open-meteo.com/");
        }

        [KernelFunction("get_weather_temperature")]
        [Description("Returns the weather temperature and its development in the next 24 hours")]
        public async Task<string> GetWeatherTemperature()
        {
            // The coordinates are hardcoded to Amsterdam (52.37 N, 4.89 E).
            var response = await _httpClient.GetAsync("v1/forecast?latitude=52.37&longitude=4.89&hourly=temperature_2m&models=knmi_seamless&forecast_days=1");
            response.EnsureSuccessStatusCode();
            var jsonResponse = await response.Content.ReadAsStringAsync();

            // The response holds parallel arrays: hourly.time[i] belongs to hourly.temperature_2m[i].
            using var doc = System.Text.Json.JsonDocument.Parse(jsonResponse);
            var root = doc.RootElement;
            var hourly = root.GetProperty("hourly");
            var times = hourly.GetProperty("time").EnumerateArray().Select(t => t.GetString()).ToArray();
            var temperatures = hourly.GetProperty("temperature_2m").EnumerateArray().Select(t => t.GetDouble()).ToArray();
            var temperatureUnit = root.GetProperty("hourly_units").GetProperty("temperature_2m").GetString();

            // Build a plain-text summary that the model can read.
            var sb = new System.Text.StringBuilder();
            sb.AppendLine("Weather forecast for the next 24 hours:");
            sb.AppendLine();

            for (var i = 0; i < times.Length; i++)
            {
                if (!DateTime.TryParse(times[i], out DateTime dateTime)) continue;

                // Format time to show only the hour
                var hourFormatted = dateTime.ToString("HH:00");
                sb.AppendLine($"{hourFormatted}: {temperatures[i]}{temperatureUnit}");
            }

            return sb.ToString();
        }
    }

Now we need to tell the AI agent that it has a plugin available that can call the weather API to provide information about the weather conditions.

First, register the WeatherPlugin as follows:

    var builder = Kernel.CreateBuilder()
        .AddOllamaChatCompletion(modelId: model, endpoint: new Uri(endpoint));

    // Add the WeatherPlugin as a plugin
    builder.Plugins.AddFromType<WeatherPlugin>();

    var kernel = builder.Build();

Now that the plugin is registered with the kernel, Semantic Kernel describes it to the model through the KernelFunction and Description attributes, so the AI agent knows the function exists and what it does. That is why clear and precise descriptions are crucial for the model to use the plugin correctly. Keep in mind that this only works with a model that supports function calling. You can find more information about function calling in Semantic Kernel here: https://learn.microsoft.com/en-us/semantic-kernel/concepts/ai-services/chat-completion/function-calling/?pivots=programming-language-csharp
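To see which descriptions the kernel actually holds for a plugin, the sketch below registers a small plugin with a described parameter and prints its metadata. `ExercisePlugin`, `suggest_warmup`, and the description texts are hypothetical names invented for this example; no model or network is involved.

```csharp
using System.ComponentModel;
using Microsoft.SemanticKernel;

var demoBuilder = Kernel.CreateBuilder();
demoBuilder.Plugins.AddFromType<ExercisePlugin>();
var demoKernel = demoBuilder.Build();

// Print what the kernel knows about each registered function. These names and
// descriptions are the information offered to the model for function calling.
foreach (var function in demoKernel.Plugins.GetFunctionsMetadata())
{
    Console.WriteLine($"{function.PluginName}.{function.Name}: {function.Description}");
    foreach (var parameter in function.Parameters)
    {
        Console.WriteLine($"  {parameter.Name}: {parameter.Description}");
    }
}

// Hypothetical plugin, invented for this example.
public class ExercisePlugin
{
    [KernelFunction("suggest_warmup")]
    [Description("Suggests a warm-up routine that fits in the given number of minutes")]
    public string SuggestWarmup(
        [Description("Available warm-up time in minutes")] int minutes)
    {
        return minutes < 10
            ? "Brisk walking and arm circles"
            : "Easy jogging followed by dynamic stretches";
    }
}
```

Printing the metadata like this is a quick way to review your descriptions as the model would receive them, before spending time on prompt tweaks.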

You have the option to let the AI Agent decide autonomously when to use a plugin, or you can trigger it manually in your code. To enable the Agent to make these decisions on its own, you need to add the following to your code:

    // Options are: Auto, None or Required
    PromptExecutionSettings settings = new() { FunctionChoiceBehavior = FunctionChoiceBehavior.Auto() };

Furthermore, make a small modification to your GetStreamingChatMessageContentsAsync call and pass the PromptExecutionSettings object as the executionSettings parameter.

    var response = chatCompletionService.GetStreamingChatMessageContentsAsync(
        chatHistory: history,
        executionSettings: settings,
        kernel: kernel
    );

When set to Auto, the model decides whether or not to call the plugin based on the context of the conversation. Setting it to None tells the model not to call any function. Finally, setting it to Required forces the model to call a function instead of answering directly.
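These options can also be narrowed down. The following is a minimal sketch, not a complete program: it only builds the settings objects and calls no model. `ClockPlugin` and `get_current_time` are hypothetical names made up for this example, and whether a given model honors each setting depends on the connector and the model.

```csharp
using System.ComponentModel;
using Microsoft.SemanticKernel;

var demoBuilder = Kernel.CreateBuilder();
demoBuilder.Plugins.AddFromType<ClockPlugin>();
var demoKernel = demoBuilder.Build();

var clockFunction = demoKernel.Plugins.GetFunction(nameof(ClockPlugin), "get_current_time");

// Auto, but only this one function is offered to the model.
PromptExecutionSettings autoOnlyClock = new()
{
    FunctionChoiceBehavior = FunctionChoiceBehavior.Auto(functions: [clockFunction])
};

// Auto without automatic invocation: the model may request a call,
// but your own code decides whether to execute it.
PromptExecutionSettings autoManualInvoke = new()
{
    FunctionChoiceBehavior = FunctionChoiceBehavior.Auto(autoInvoke: false)
};

// The model has to call a function, or is told not to call any.
PromptExecutionSettings required = new() { FunctionChoiceBehavior = FunctionChoiceBehavior.Required() };
PromptExecutionSettings none = new() { FunctionChoiceBehavior = FunctionChoiceBehavior.None() };

// Hypothetical plugin, invented for this example.
public class ClockPlugin
{
    [KernelFunction("get_current_time")]
    [Description("Returns the current local time as HH:mm")]
    public string GetCurrentTime() => DateTime.Now.ToString("HH:mm");
}
```

Restricting the function list can help when a kernel has many plugins and you want the agent to use only a few of them in a given conversation.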

To manually invoke the plugin, add the following call to your code:

    // Call the WeatherPlugin function
    var weatherTemperature = await kernel.Plugins
        .GetFunction(nameof(WeatherPlugin), "get_weather_temperature")
        .InvokeAsync(kernel);
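A manual invocation returns a FunctionResult rather than the plain return value. Here is a minimal offline sketch of passing arguments and reading the result; `UnitPlugin` and `celsius_to_fahrenheit` are hypothetical and exist only for this example.

```csharp
using System.ComponentModel;
using Microsoft.SemanticKernel;

var demoBuilder = Kernel.CreateBuilder();
demoBuilder.Plugins.AddFromType<UnitPlugin>();
var demoKernel = demoBuilder.Build();

// Arguments are matched to the function's parameters by name.
var arguments = new KernelArguments { ["celsius"] = 21.5 };

FunctionResult result = await demoKernel.InvokeAsync(
    nameof(UnitPlugin), "celsius_to_fahrenheit", arguments);

// GetValue<T>() unwraps the value the function returned.
double fahrenheit = result.GetValue<double>();
Console.WriteLine($"21.5 degrees Celsius is {fahrenheit:F1} degrees Fahrenheit");

// Hypothetical plugin, invented for this example.
public class UnitPlugin
{
    [KernelFunction("celsius_to_fahrenheit")]
    [Description("Converts a temperature from degrees Celsius to degrees Fahrenheit")]
    public double CelsiusToFahrenheit(
        [Description("Temperature in degrees Celsius")] double celsius) => celsius * 9 / 5 + 32;
}
```

For the WeatherPlugin in this post, the same pattern with GetValue<string>() would give you the forecast text, which you could then add to the chat history yourself.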

There is so much more to cover, but for now give this a try. And please play around with different prompts.

Sources

https://learn.microsoft.com/en-us/semantic-kernel/concepts/ai-services/chat-completion/?tabs=csharp-AzureOpenAI%2Cpython-AzureOpenAI%2Cjava-AzureOpenAI&pivots=programming-language-csharp

https://learn.microsoft.com/en-us/semantic-kernel/concepts/ai-services/chat-completion/function-calling/?pivots=programming-language-csharp

https://www.llama.com/docs/llama-everywhere/
